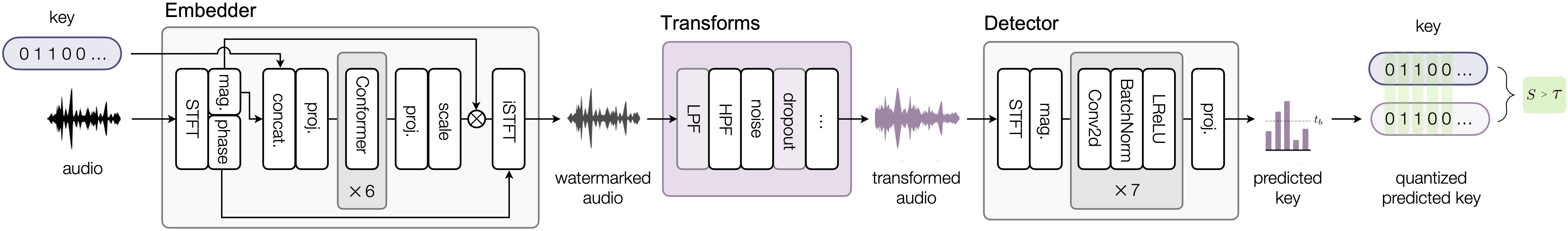

High-quality speech synthesis models may be used to spread misinformation or impersonate voices. Audio watermarking can help combat such misuses by embedding a traceable signature in generated audio. However, existing audio watermarks are not designed for synthetic speech and typically demonstrate robustness to only a small set of transformations of the watermarked audio. To address this, we propose MaskMark, a neural network-based digital audio watermarking technique optimized for speech. MaskMark embeds a secret key vector in audio via a multiplicative spectrogram mask, allowing the detection of watermarked real and synthetic speech segments even under substantial signal-processing or neural network-based transformations. Comparisons to a state-of-the-art baseline on natural and synthetic speech corpora and a human subjects evaluation demonstrate MaskMark's superior robustness in detecting watermarked speech while maintaining high perceptual transparency.

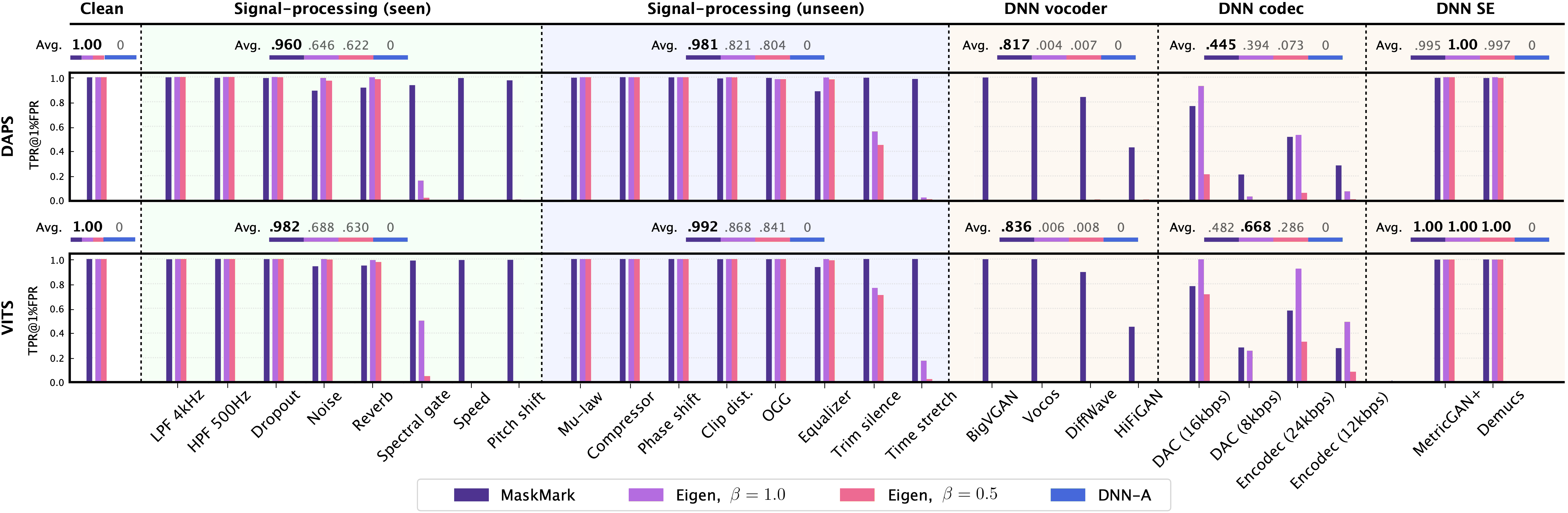

Compared to state-of-the-art signal-processing and neural network-based watermarks, MaskMark is better at distinguishing watermarked audio while maintaining a low false-positive rate. We apply various audio transformations prior to watermark detection and measure the true-positive rate at a fixed false-positive rate of 1% (TPR@1%FPR). MaskMark achieves competitive or better robustness under every evaluated transformation, and performs significantly better in both the average and the worst case across most broad transformation types. MaskMark demonstrates strong robustness to transformations for which other methods fail completely at low false-positive rates (time-stretching, neural vocoding), and generalizes far outside its relatively small training dataset and limited training distribution of signal-processing transformations.
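As an illustration of the TPR@1%FPR metric, the sketch below computes a true-positive rate at a fixed false-positive rate from two lists of detector scores. It is not the evaluation code used for MaskMark. The function name `tpr_at_fpr`, the convention that a higher score means "more likely watermarked", and the strict `>` decision rule are all assumptions made for this example.

```python
def tpr_at_fpr(pos_scores, neg_scores, max_fpr=0.01):
    """Return (tpr, threshold) for the lowest threshold with FPR <= max_fpr.

    pos_scores: detector scores for watermarked audio (hypothetical input).
    neg_scores: detector scores for unwatermarked audio (hypothetical input).
    A clip is flagged as watermarked when its score is strictly greater
    than the threshold (an assumed convention).
    """
    if not pos_scores or not neg_scores:
        raise ValueError("need at least one positive and one negative score")

    neg_sorted = sorted(neg_scores, reverse=True)

    # Number of unwatermarked clips we may flag without exceeding max_fpr.
    allowed = int(max_fpr * len(neg_sorted))

    # Using the (allowed + 1)-th largest negative score as the threshold
    # leaves at most `allowed` negatives strictly above it.
    if allowed < len(neg_sorted):
        threshold = neg_sorted[allowed]
    else:
        threshold = float("-inf")

    tpr = sum(s > threshold for s in pos_scores) / len(pos_scores)
    return tpr, threshold


if __name__ == "__main__":
    # Toy scores, invented purely for illustration.
    watermarked = [0.25, 0.5, 0.8, 0.95]
    clean = [0.1, 0.2, 0.3, 0.9]
    print(tpr_at_fpr(watermarked, clean, max_fpr=0.25))
```

With only a handful of unwatermarked clips, a 1% budget allows no false positives at all, so the threshold falls back to the highest clean score. A meaningful TPR@1%FPR estimate therefore needs at least a few hundred negative examples.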

We provide audio comparisons between the proposed MaskMark watermark, the Eigen watermark of Tai & Mansour (2019), and the DNN-A watermark of Pavlović et al. (2022). For reference, we include the original audio (Original).

DAPS-clean subset (44.1 kHz)

| Utterance | Original | MaskMark | Eigen (β=0.5) | Eigen (β=1.0) | DNN-A |
|---|---|---|---|---|---|
| 0 | | | | | |
| 1 | | | | | |
| 2 | | | | | |
| 3 | | | | | |
| 4 | | | | | |
| 5 | | | | | |
| 6 | | | | | |
| 7 | | | | | |
| 8 | | | | | |
| 9 | | | | | |

VITS generations (22.05 kHz)

| Utterance | Original | MaskMark | Eigen (β=0.5) | Eigen (β=1.0) | DNN-A |
|---|---|---|---|---|---|
| 0 | | | | | |
| 1 | | | | | |
| 2 | | | | | |
| 3 | | | | | |
| 4 | | | | | |
| 5 | | | | | |
| 6 | | | | | |
| 7 | | | | | |
| 8 | | | | | |
| 9 | | | | | |